Practical use of generics in Java

Dive into the world of Java generics as we transform a simple key-value store to support any data type! This post breaks down the process of refactoring code for flexibility, covering the hurdles I faced along the way. Perfect for developers wanting to level up their designs with generics! 💡

Intro

When I wrote the first Building a Database post about creating a key-value store, I used String for both keys and values. This choice made sense at the time—strings are easy to work with, and since my main goal was to learn and understand the underlying algorithms, it served its purpose well. However, in real-world applications, a system limited to storing only strings isn't practical. Users need the flexibility to store various types of data. To address this, I refactored the application to support generics, allowing it to handle any key-value type. In this post, I'll introduce Java generics and share my experiences and challenges in making my database more flexible by refactoring it to use generics.

Brief introduction to generics in Java

Generics provide a way for us to handle types used in our code as parameters and create classes and methods that can work on various types. Think List for example. You have to provide the type parameter when you create a List and you can create Lists for various types like List<Integer>, List<String> etc.

Things you can do with Java generics

You can use wildcards ? to allow generic methods and classes to handle unknown types, making code more flexible.

List<?> c = new ArrayList<Integer>();You can add upper and lower bounds to the wildcards. As the name suggests with these you can restrict the types the clients of your code can provide.

public <T extends Number> void printDouble(T num) {

System.out.println(num.doubleValue());

}

printDouble(10); // Works with Integer

printDouble(5.5); // Works with Double

printDouble("Hello"); // Compilation error! Not a Number

Upper bound (extends)

With an upper bound you limit the types to be the subclasses of the type you name. In the above example T could be Integer or Double but not Object or String.

public static void addNumbers(List<? super Integer> list) {

list.add(10);

list.add(20);

}Lower bound (super)

With a lower bound you limit the parameter to be a given type or one of its super types. In the example it could be List<Integer>, List<Number> or even List<Object> but not List<String>.

You can also add multiple bounds of the same type with the & character like T extends Comparable<T> & Serializable

Type erasure

We also have to mention type erasure. Type erasure is the process by which Java removes generic type information during compile-time, replacing type parameters with their upper bounds (or Object if no bound is specified). This ensures backward compatibility with older Java versions that do not support generics. In practice the following code you write

class Box<T extends Number> {

private T value;

public void set(T value) { this.value = value; }

public T get() { return value; }

}Will be translated to this by the compiler:

class Box {

private Number value; // T is erased to Number

public void set(Number value) { this.value = value; }

public Number get() { return value; }

}Type erasure adds limitations to the use of generics, mostly because we don't have type information during runtime.

List<Integer> intList = new ArrayList<>();

List<Float> floatList = new ArrayList<>();

if (intList.getClass() == floatList.getClass()) { // true

System.out.println("They look the same to me!")

}It is not possible to create generic arrays.

T[] array = new T[10]; // Compilation error!We cannot instantiate generic types.

class Container<T> {

T instance = new T(); // Compilation error!

}Overloading can be troublesome as well.

interface Printer {

void print(List<String> list) { }

void print(List<Integer> list) { } // Compilation error! Same erased type

}Advantages of generics

- Code Reusability: One of the biggest advantages of generics is the ability to write reusable code that works with different types. Instead of creating multiple versions of the same class or method for different data types, generics allow you to define it once and use it with any type.

- Type Safety: Compile-time type checking prevents runtime errors, making code more robust. Just think about using the raw version of List and having to cast all values when you fetch something from it.

Disadvantages

- Increased Complexity: Generics introduce additional complexity, especially when using advanced features like wildcards (

? extends,? super). - No Support for Primitives: Generics work only with reference types, requiring the use of wrapper classes (e.g.,

Integer,Double) for primitive data types.

Refactoring the database code

In the Build a database series I had an interface with very basic methods like put(String key, String value), get(String key), delete(String key). I wanted to allow users of this interface to freely choose both the key and value types. However, the key type must be comparable since both the LSM implementation and B-Tree rely on ordering elements based on their keys.

I also wanted to keep the interface as simple as possible and put the least amount of coding or configuration to the client.

The implementation

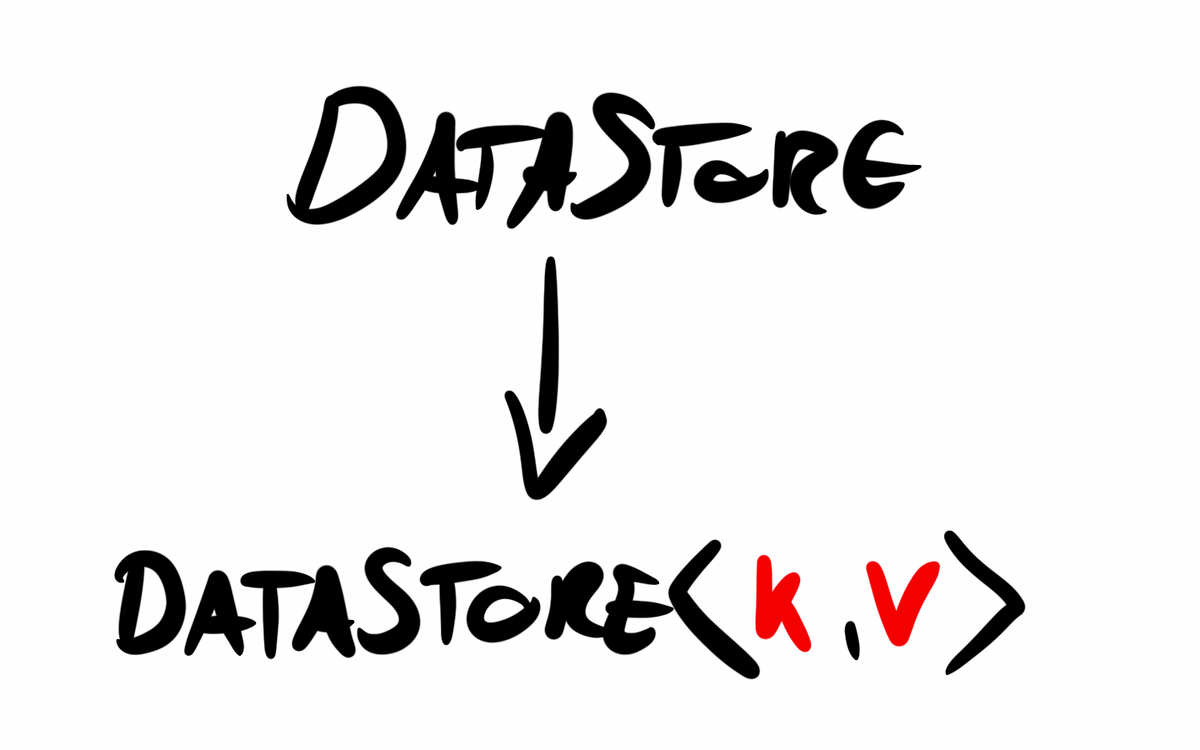

Doing 90% of the change was super easy. I started from the top and changed the DataStore interface first:

public interface DataStore<K extends Comparable<K>, V> {

void put(K key, V value) throws IOException;

Optional<V> get(K key) throws IOException;

void delete(K key) throws IOException;

}This caused a lot of issues in the project as all classes implementing DataStore had to provide the generic type variables. So I started fixing these issues from top to bottom.

public class LsmDataStore<K extends Comparable<K>, V> implements DataStore<K, V> {

private static final long FLUSH_TO_DISK_LIMIT = 5;

private final CommitLog<K,V> commitLog;

private final Memtable<K, V> memtable;

private final SSTableManager<K, V> ssTableManager;

...With the B-Tree this went very smoothly and I had no difficulties, however there were challenging parts in the Log-Structured Merge Tree implementation.

Serialization

In my implementation, the B-Tree is not persisted to disk, but the LSM tree is. I chose to store the SSTable and index files as plain text to make the storage human-readable, which helps with learning and understanding how the system works. When both the key and value were fixed as Strings, this was straightforward. However, allowing arbitrary types made things more challenging. The system needed a way to serialize these types to strings and deserialize them back into Java objects, but there is no universal method for doing this. You can do this with Jackson and convert to JSON probably, but a principle I follow in this repository is not to use third party libraries (as much as possible), so I did not want to add Jackson or another purpose built serialization library. I wanted to solve it with the tools Java has out of the box.

My first idea was to restrict key and value types to implement Serializable and use the Java serialization subsystem.

public interface DataStore<K extends Comparable<K> & Serializable, V extends Serializable> {In this case I could use Java's ObjectOutputStream and ObjectInputStream to write and read the objects to a byte array and store it as BASE64 text.

The pros of this approach would have been:

- I can provide a generic serialization-deserialization solution, the client only has to make sure that the classes they use are serializable

- Builds on built-in Java capabilities

That sounds pretty nice, but there are cons as well:

- I have to force the client to only use Serializable types, which is an unwanted restriction

- The output would be a BASE64 string which is String yes, but not readable at all. I could create binary files at that point, it would be just as "easy" to understand by opening the file in an editor

Another option, which requires a bit more work from clients but offers greater flexibility, is to have them provide their own SerDe implementations for the types they use. I don’t prefer this approach because it exposes implementation details to the client, which is not good design. Clients would need to instantiate two LsmSerDe objects for their key and value types to handle serialization and deserialization from strings. However, the fact that the database stores data as strings should be irrelevant to them. They also wouldn't know that I use :: as a separator between keys and values on the storage layer, and nothing would prevent them from serializing their data with many :: in it, potentially causing conflicts. It’s not an ideal solution, but it’s the best I’ve come up with so far.

public interface SerDe<T> {

T deserialize(String input);

String serialize(T input);

}The SerDe interface

The interface is simple.

public class LsmSerDe<T> implements SerDe<T> {

private static final String TOMBSTONE = "<TOMBSTONE>";

public static final String SEPARATOR = "::";

private final Function<String, T> deserializer;

private final Function<T, String> serializer;

public LsmSerDe(Function<String, T> deserializer, Function<T, String> serializer) {

this.deserializer = deserializer;

this.serializer = serializer;

}

@Override

public T deserialize(String input) {

if (TOMBSTONE.equalsIgnoreCase(input)) {

return null;

}

return deserializer.apply(input);

}

@Override

public String serialize(T input) {

if (input == null) {

return TOMBSTONE;

}

String result = serializer.apply(input);

if (result == null || result.contains(SEPARATOR)) {

throw new IllegalArgumentException("Illegal character in serialized object: " + SEPARATOR);

}

return result;

}

}SerDe implementation

The basic implementation just applies the provided functions. It makes sure that the serialized string will not contain the separator used in the data files. There is also some magic here with the tombstones that I'll talk about a bit later.

public LsmDataStore(SerDe<K> keySerDe, SerDe<V> valueSerDe) throws IOException {

this.commitLog = new DefaultCommitLog<>(keySerDe, valueSerDe);

if (this.commitLog.getSize() > 0) {

this.memtable = new Memtable<>(this.commitLog.readCommitLog());

} else {

this.memtable = new Memtable<>();

}

ssTableManager = new SSTableManager<>(keySerDe, valueSerDe);

ssTableManager.readTablesFromFile();

}The client provided SerDes are passed down to the classes where file writes and reads actually happen.

While I don't necessarily like this SerDe approach for the above mentioned issues, keeping the data storage files human readable is a must have, and with this restriction I think this is the best solution. The goal of my databases project is to learn and understand.

Deleting values

In an LSM we use a constant value to mark deleted items called tombstone. It is easy to define a constant for String type, but for a generic one it gets difficult. There is no value other than null that can be used in place for any arbitrary type to be a constant.

Since I keep my storage layer String based I'd like to keep the tombstone as a String constant too. This was a bit tricky to figure out, but as you can see above I ended up converting null values with the SerDes to this tombstone constant and back to null.

Summary

In this post I gave a brief intro into what you can do with them and how I applied generics to an existing project. In spite of some of the limitations type erasure brings, generics are a very powerful tool, and it is good to have working knowledge on how to use them.

Some sources I used for this post:

The code is available on GitHub.